“Why are many of your cybersecurity maps missing user considerations?” A fair challenge

A few days ago, I had the privilege of running a session at MapCamp (an annual event for Wardley mappers, where we learn how Wardley mapping is applied in both Government and Industry from some of the greatest minds I've had the pleasure of meeting). There I showed a decent-sized audience some of the maps applied to Cyber Security that I've been producing over the last year or so.

Halfway through the session, a lady in the audience asked why my maps tended to be light on considerations for the user, or on what they mean for users. I didn't probe too much, partly in the interest of time and partly because I hadn't thought much about it before (not in those terms, at least), so I gave the obvious answer, which is also correct:

“Looking at the themes and content of my maps, the anchors I tend to choose are more technical in nature, so I don't believe adding that extra complexity would add much to them.” (At least, that's how I recall answering it :))

However, the question stayed with me, as I felt I hadn't given an answer that convinced even myself, so I've been thinking about it since.

I think I've now come up with 3 reasons why that is the case, and this blog post explains them. The 3 reasons are:

- I refuse to treat users like “Dave”

- I won’t be part of the overall “awareness mess”

- I don't yet understand complexity science and its application to social science well enough to make positive contributions

Let me explain what I mean by each in more detail:

I refuse to treat users like “Dave”

I'll be the first to admit (and if you know me, you might be surprised to hear it without this preamble) that, generally speaking, I'm not the biggest fan of the “people” category. A big part of that is my own upbringing: I grew up poor and struggled with obesity most of my life (things a lot of you may relate to). While those are fixed now, I often get asked for advice and support on dealing with them, and experience tells me people say things but don't follow through. Add the current political landscape across the world, and it's sometimes hard for me not to default to that view.

However, I'm self-aware enough to try to catch myself having those thoughts, particularly in my writing, as defaulting to “the user is stupid” won't help anyone move forward or improve the overall landscape. If that's all I could bring to the table, I'd be better off staying out of it.

I won't be part of the overall “awareness mess”

Security awareness has come a long way in the last decade, and I'm glad to see the industry shifting towards working WITH our users to achieve a more secure state, instead of just designing systems and processes that focus solely on constraining the user experience.

Nancy Leveson argued about a decade ago that a system which fails under operator error (and our cyber security protections fail a lot) is a system in need of redesign.

But unfortunately, as an industry, we're still more focused on engineering from “the screen in” (i.e. the tin, or virtual tin, we configure, made up of systems, networks and controls) than from “the screen out” (i.e. accepting that we KNOW we have users, operators and privileged operators, and fully designing both technology and process around their failure modes).

Though there's a growing amount of good security awareness work out there, I feel that most of it rests on overly simplified models, on activities narrowly focused on specific concerns (e.g. don't click that link, check the recipients on emails), or on trying to manage incentives and consequences (“have this for good behaviour” or “you've been naughty, so now we need to make an example of you”). I'm not going to be part of this if I can avoid it.

Overly simplistic approaches to the human factor of cyber security will either produce inconsistent results or lead us down the silly path of arguing causation versus correlation based on opinions, anecdotes or gut feel, and that's just not me. You'll find others out there happy to do it, though, if that's what you're after.

I don't yet understand complexity science and its application to social sciences well enough to make positive contributions

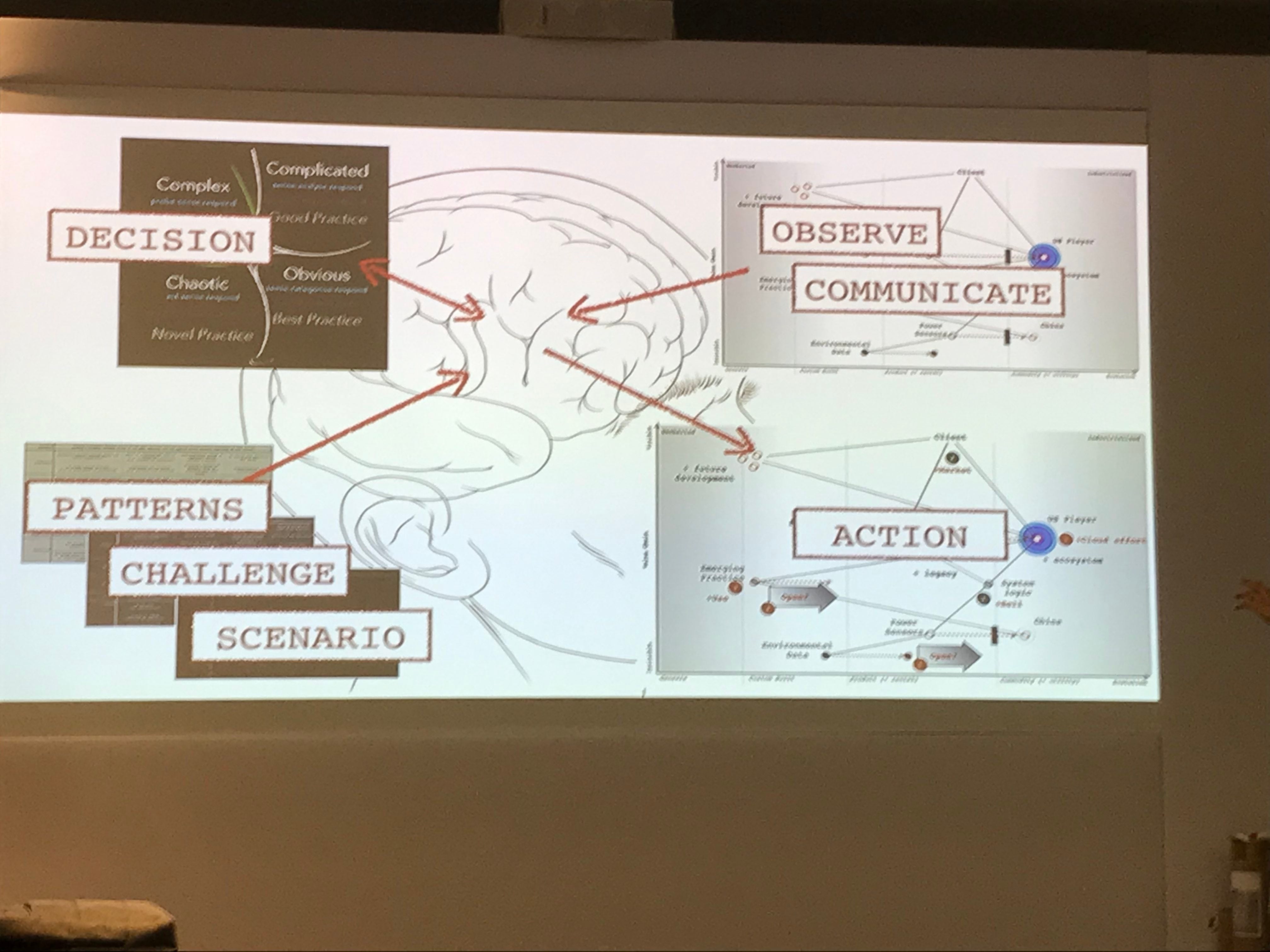

I've known the short version of complexity science for a while now, particularly the Cynefin framework. As many of you may know, Dave Snowden (Cynefin framework) and Simon Wardley (Wardley mapping) have been touring the planet together talking about both of their frameworks.

The Cynefin framework deals with what's been coined anthro-complexity, which is about “understanding the dispositional state of the present, control future state by modulation, vector measurement as possible & coherent”. In short, humans are very complex (in both their inputs and their outputs), and the other frames we might apply to them (scientific management, systems thinking, or computational complexity as in AI) will either oversimplify things or lead us down the path of wrong solutions and/or chaos.

Because I'm not yet versed in this but appreciate the complexity of it all, I've stayed away from contributing, as I can't yet see how I could help advance or improve the field.

Closing thoughts

The great thing about being self-aware enough to understand our limitations is that it empowers us to do something about them. In this case, that means learning more about anthro-complexity so that I can hopefully start contributing my views in that space, as I've been trying to expose more Cyber professionals to Wardley mapping, and more Business people to maps depicting Cyber scenarios.

The good news is that I'm now in the 3rd week of a 12-week course on the Cynefin framework, so I'm hoping that early next year I'll be in a position to start contributing there. Though I certainly didn't mention it to the lady who made the challenge, that is definitely worth pursuing, and something we need more of.