Rasmussen’s Systemic Risk Modelling and Cyber Security

I first became acquainted with Rasmussen’s work at the beginning of last year, by watching Dr. Cook’s talk at Velocity 2013 (links at the bottom). But it took someone telling me, about 3-4 months ago, to review it for it to actually click with me and, more than that, to pique my curiosity enough to go look for Dr. Rasmussen’s original paper, “Risk Management in a dynamic society: a modelling problem” (again, links at the bottom). Reading it made me appreciate how refreshing it is, even though it dates from 1997 (like so many good things in Safety Management that we’re yet to learn about in Cyber Security).

This blog post isn’t meant to replace watching Dr. Cook’s talk or even reading the original paper, which is full of wisdom and insights. It’s my little way of letting the Infosec audience know of its existence and of pointing to some of the learnings we can take from it.

Let’s start with Risk Modelling

In my own words, Rasmussen’s approach to the management of risk is truly systemic. There are 2 quotes in the paper which summarise this better than I ever could, so I’ll just paste them:

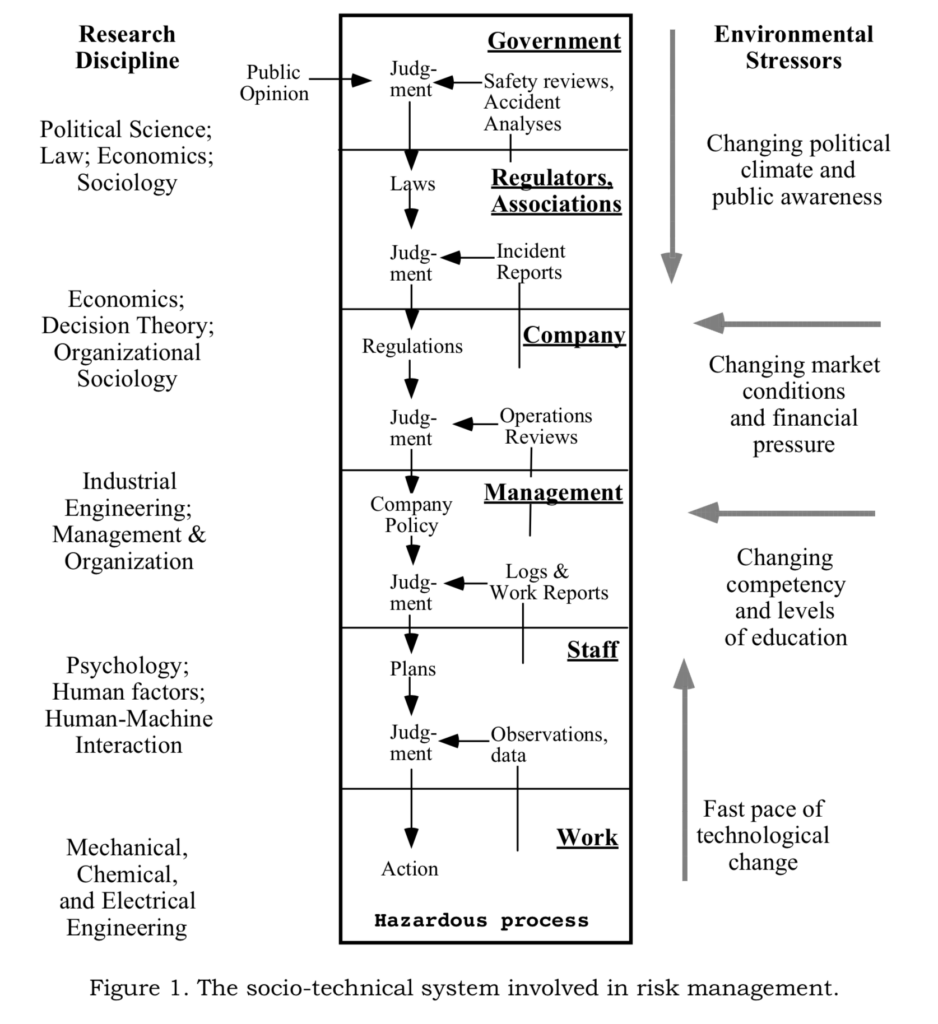

The socio-technical system involved in risk management includes several levels ranging from legislators, over managers and work planners, to system operators. This system is presently stressed by a fast pace of technological change, by an increasingly aggressive, competitive environment, and by changing regulatory practices and public pressure.

Jens Rasmussen

Applied to cyber security, this level of complexity in the management of risk runs from the growing number of government and industry cyber certification schemes and regulatory expectations all the way down to the level of knowledge workers (not just Engineers and Developers, but also Call Centre or IT operations staff). This “fast pace of technological change” hasn’t decreased. If anything it’s getting ever faster, and the competitive environment is following suit, with data breaches being a reality we can’t overlook, along with the public scrutiny and pressure which arise from them.

The other quote is (highlighting is mine):

It is argued that this requires a system-oriented approach based on functional abstraction rather than structural decomposition. Therefore, task analysis focused on action sequences and occasional deviation in terms of human errors should be replaced by a model of behaviour shaping mechanisms in terms of work system constraints, boundaries of acceptable performance, and subjective criteria guiding adaptation to change

Jens Rasmussen

A lot of the current approaches to management of risk in cyber security rely on structural decomposition: we identify all the elements which play a role in the operation and/or security of a platform and then determine (typically) technical controls, supported by procedures or processes to be followed by staff, in order to control the risk of incidents or breaches. The challenge with this approach is that we’ll tend to label any deviation from those procedures as “human error”. Those procedures are typically written in a vacuum, without understanding the variability of daily operations which is inherent and integral to their success. As such we’ll always find a “human error”, because those detailed procedures typically assume conditions that are seldom experienced in the day to day work of operators (i.e. an environment where there are no other economic or functional pressures placed on them).

What Rasmussen proposes instead is a risk model which considers the constraints of work systems (the different pressures acting on them) and the boundaries of acceptable performance in different domains. It also recognises that the typical compliance-based approach, built on procedures and processes, makes non-compliance all too common in the regular operation of our systems, and does little to inform the heuristics and subjective criteria that could heighten operators’ attention and in fact help avoid accidents, incidents or breaches.

(highlights mine)

To complete a description of a task as being a sequence of acts, these degrees of freedom must be resolved by assuming additional performance criteria that appear to be ‘rational’ to instructors. They cannot, however, foresee all local contingencies of the work context and, in particular, a rule or instruction is often designed separately for a particular task in isolation whereas, in the actual situation, several tasks are active in a time sharing mode. This poses additional constraints on the procedure to use, which were not known by the instructor. In consequence, rules, laws, and instructions practically speaking are never followed to the letter

Jens Rasmussen

Because our “security PROCESSES” are often such a small part of the “operational PRACTICES” that operators perform, and not the discrete, well separated activities that our processes imagine or assume, it’s very likely that “instructions practically speaking are never followed to the letter”.

The variability found in normal operator work is there, and it will always be there. Day to day it’s a huge contributor to the success of our operations, and sometimes also a contributor to incidents and breaches.

So our risk modelling needs to cater for this variability. This means we need to treat humans as humans, who have a number of degrees of freedom in performing work tasks, whose tasks are part of organisational practices, and who operate in a context with competing goals and constraints.

Human behaviour in any work system is shaped by objectives and constraints, which must be respected by the actors for work performance to be successful. Aiming at such productive targets, however, many degrees of freedom are left open which will have to be closed by the individual actor by an adaptive search guided by process criteria such as work load, cost effectiveness, risk of failure, joy of exploration, etc. The work-space within which the human actors can navigate freely during this search is bounded by administrative, functional, and safety related constraints.

Jens Rasmussen

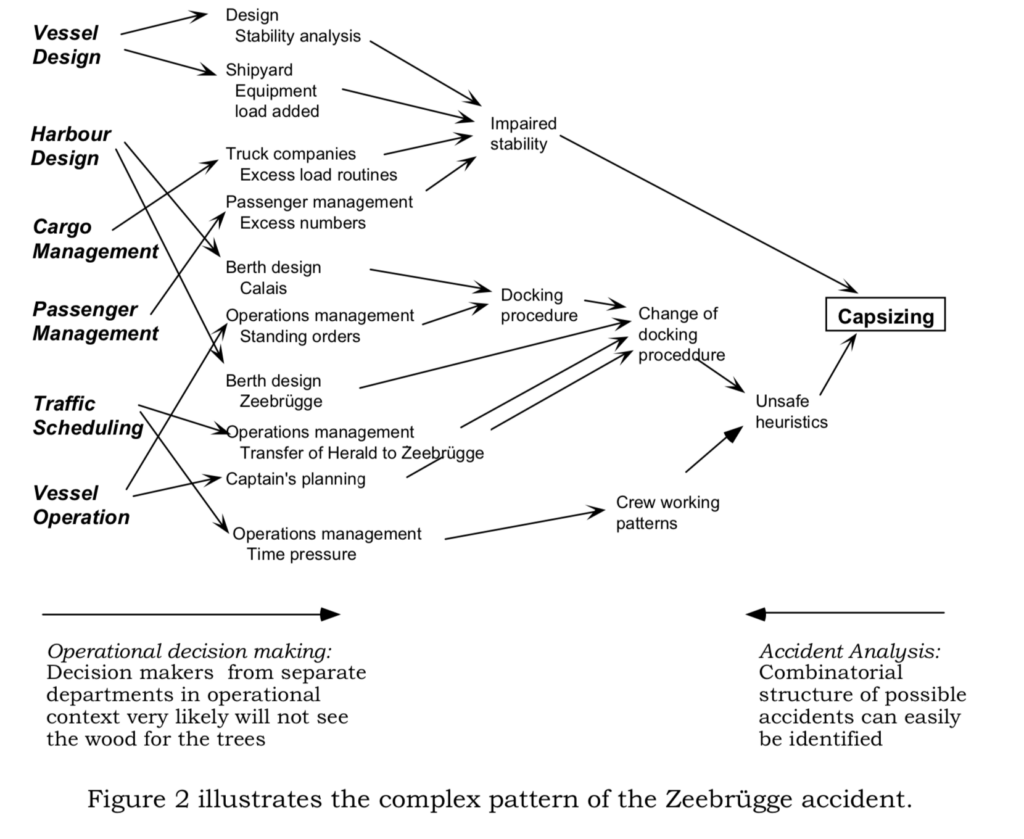

The complexity and multiple contributing factors to incidents

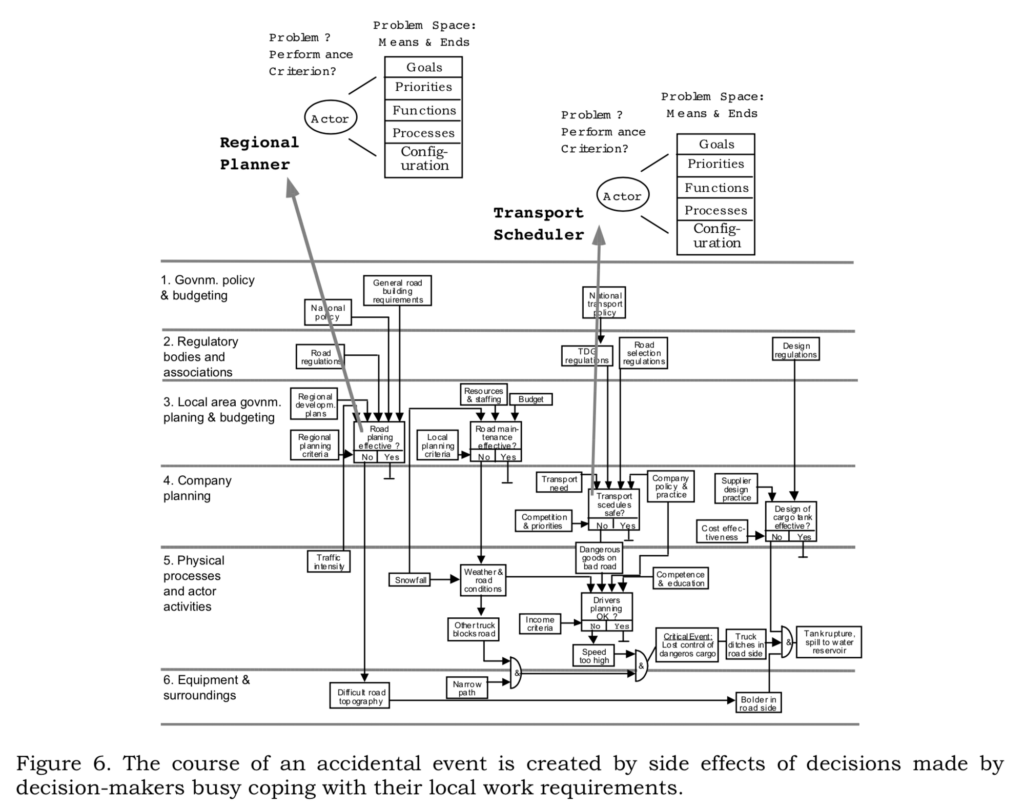

In Cyber, we often love to be linear and simplistic with regards to data breaches: “He was hacked because he didn’t patch Apache Struts” or “they were hacked because they didn’t terminate the account”. Those are often significant contributing factors, but when we take this linear approach to looking at incidents and breaches we leave most of the learnings on the table, as we blind ourselves to the characteristics of the system which led to the creation and operation of insecure software. It’s not an “Engineering problem”. It’s a whole organisation that contributes to the creation and operation of insecure software. Just that “one thing alone” we point to rarely tells the whole story of what happened to produce the result we now see and rationalise with the benefit of hindsight. “It takes a village”.

What I’m not saying

Sometimes this type of argument is perceived as a dichotomy between “all you’re doing now is wrong” and “this is the enlightened way to do this”. For sure, that’s not what I’m arguing.

I’d highly recommend this blog post by Dave Snowden (in particular the first 3 paragraphs) on the perils of such approaches to anything.

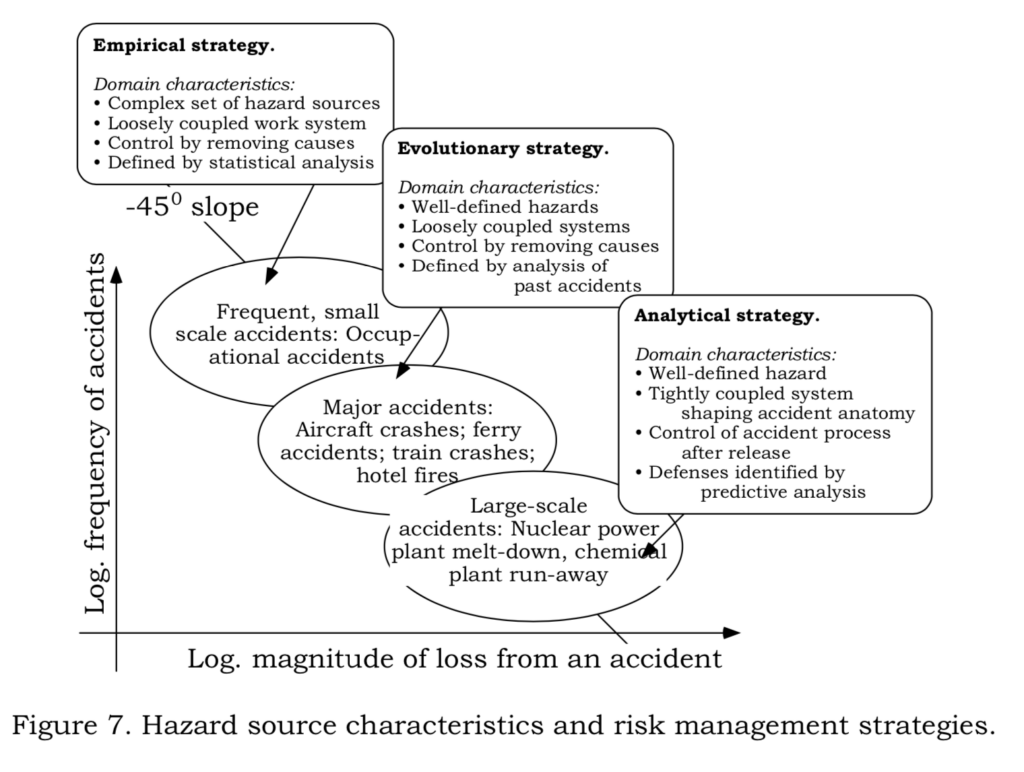

What I am saying is that most of our current approaches are optimised for dealing with a particular spectrum of risk consequences and attempt to apply a single method to a multi-disciplinary problem affecting multiple stakeholders across multiple timespans. That’s a contributing factor to why we’re not evolving our practices at a pace that puts us ahead of the evolution of the threats we face.

How do we connect the dots, then?

Dr. Rasmussen proposes an approach in which we apply different risk management strategies depending on both the frequency and the magnitude of accidents, and I believe it can really apply to Cyber Security as well.

Empirical strategy – where we can apply simple controls. Things happen often, so it’s simple to study them and to discuss with operators the best approaches and heuristics to control them.

Evolutionary strategy – where we can analyse past events and accidents, and understand how different parts of the system interacted to produce the conditions which led to an incident.

Analytical strategy – where the hazard itself is well defined (a data breach, for instance) but the entanglements in the system are numerous. It requires a real understanding of those entanglements and relationships, and of how failures in one part of the system can affect other parts of the system.
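To make the idea of picking a strategy by frequency and magnitude a bit more concrete, here is a minimal Python sketch. It is my own illustration and not something taken from the paper: the `RiskScenario` type, the 1 to 5 impact scale, the two cut-off values and the example scenarios are all hypothetical, and any real organisation would need to choose its own.

```python
from dataclasses import dataclass
from enum import Enum


class Strategy(Enum):
    EMPIRICAL = "empirical"
    EVOLUTIONARY = "evolutionary"
    ANALYTICAL = "analytical"


@dataclass(frozen=True)
class RiskScenario:
    name: str
    events_per_year: float  # rough observed or estimated frequency
    impact: int             # hypothetical scale: 1 (minor) to 5 (severe)


# Hypothetical cut-offs, chosen only for illustration.
FREQUENT_EVENTS_PER_YEAR = 12.0
SEVERE_IMPACT = 4


def choose_strategy(scenario: RiskScenario) -> Strategy:
    """Map a scenario to one of the three strategies (one possible reading)."""
    # Frequent events give us plenty of day to day experience to learn from.
    if scenario.events_per_year >= FREQUENT_EVENTS_PER_YEAR:
        return Strategy.EMPIRICAL
    # Rare and severe events cannot be learned by waiting for them to happen,
    # so the system and its entanglements have to be analysed up front.
    if scenario.impact >= SEVERE_IMPACT:
        return Strategy.ANALYTICAL
    # Everything in between: learn from analysing past events and incidents.
    return Strategy.EVOLUTIONARY


scenarios = [
    RiskScenario("phishing link clicked", events_per_year=200, impact=1),
    RiskScenario("storage misconfiguration", events_per_year=2, impact=3),
    RiskScenario("large-scale data breach", events_per_year=0.1, impact=5),
]

for s in scenarios:
    print(f"{s.name}: {choose_strategy(s).value}")
```

The point of the sketch is only that a single method is not applied to every scenario. Where exactly the boundaries between the strategies sit is a judgement call for each organisation.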

In my next blog post I’ll be looking specifically at Rasmussen’s System Model and its 3 boundaries, and how we can perceive Infosec and Cyber Security through that lens to better understand and appreciate our operating points and how they fluctuate towards incidents.

References

Dr. Richard Cook, Velocity 2013, “How Complex Systems Fail” https://www.youtube.com/watch?v=PGLYEDpNu60

Jens Rasmussen, “Risk management in a dynamic society: a modelling problem” https://www.sciencedirect.com/science/article/abs/pii/S0925753597000520